EfficientNet Model Architecture

EfficientNet summary post: [AI Research Paper Review/More] - EfficientNet Summary (lynnshin.tistory.com)

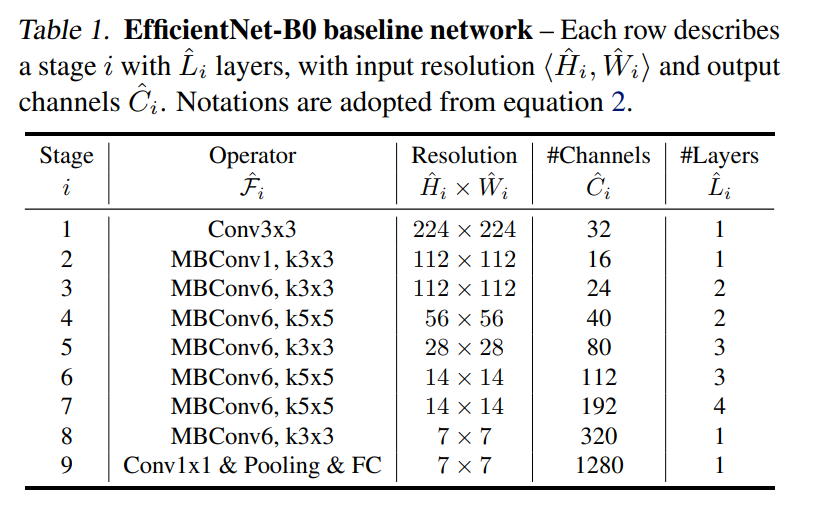

EfficientNet-B0 baseline network architecture

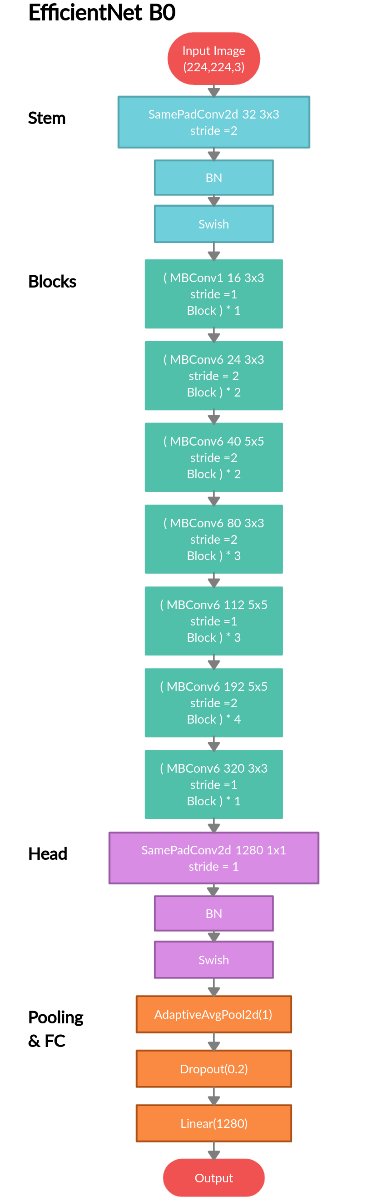

Understanding the full EfficientNet-B0 model architecture

MBConv1 block structure (= mobile inverted bottleneck convolution)

- MobileNetV1 and MobileNetV2

- Depthwise Separable Convolution

- Squeeze-and-Excitation Networks
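The three ideas listed above appear directly in the weight count of a single MBConv block. The block has a 1x1 expansion convolution (the MobileNetV2 inverted bottleneck), a k×k depthwise convolution followed by a linear 1x1 projection (a depthwise separable convolution), and an SE module in between. The pure-Python sketch below counts weights per part and compares the depthwise separable pair with a standard k×k convolution. It makes three assumptions. First, biases and BatchNorm parameters are ignored. Second, the SE bottleneck width is 0.25 × the block's *input* (not expanded) channels, a `se_ratio=0.25` setting commonly used in EfficientNet implementations. Third, the helper function names are hypothetical.

```python
def standard_conv_weights(k, c_in, c_out):
    """Weights of a regular k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_weights(k, c_in, c_out):
    """k x k depthwise conv (one filter per channel) + 1x1 pointwise conv."""
    depthwise = k * k * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise


def mbconv_weights(c_in, c_out, k, expand_ratio, se_ratio=0.25):
    """Weight count per part of one MBConv block (biases and BatchNorm ignored)."""
    mid = c_in * expand_ratio                        # expanded (inverted bottleneck) width
    expand = c_in * mid if expand_ratio != 1 else 0  # MBConv1 has no 1x1 expansion conv
    depthwise = k * k * mid
    se_ch = max(1, int(c_in * se_ratio))             # SE bottleneck width (assumed rule)
    se = mid * se_ch + se_ch * mid                   # squeeze FC + excitation FC
    project = mid * c_out                            # linear 1x1 projection
    return {"expand": expand, "depthwise": depthwise, "se": se, "project": project}


if __name__ == "__main__":
    # Depthwise separable vs. standard 5x5 conv at MBConv6's expanded width (240 -> 40).
    print(standard_conv_weights(5, 240, 40), depthwise_separable_weights(5, 240, 40))
    # A repeated 40-channel MBConv6 k5x5 block, then the MBConv1 block of stage 2.
    print(mbconv_weights(40, 40, 5, 6))
    print(mbconv_weights(32, 16, 3, 1))
```

For the 240 → 40, 5×5 case, the depthwise separable pair needs 15,600 weights instead of 240,000. That ratio equals 1/c_out + 1/k² = 1/40 + 1/25 = 0.065, which is the saving MobileNet-style blocks are built around. The SE module adds comparatively few weights because its bottleneck is only a quarter of the block's input width.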

< References >

Papers:

EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks arxiv.org/pdf/1905.11946.pdf

EfficientNet Google AI Blog ai.googleblog.com/2019/05/efficientnet-improving-accuracy-and.html

Squeeze-and-Excitation Networks arxiv.org/pdf/1709.01507.pdf

MobileNetV2 arxiv.org/pdf/1801.04381.pdf

MobileNetV3 arxiv.org/pdf/1905.02244v5.pdf

Code: github.com/zylo117/Yet-Another-EfficientDet-Pytorch

Blogs:

Image Classification with EfficientNet: Better performance with computational efficiency (medium.com)

[AI Theory] Layers: their types, roles, and theory - 5 (DepthwiseConv, PointwiseConv, Depthwise Separable Co (underflow101.tistory.com)

EfficientNet: Summary and Implementation hackmd.io/@bouteille/HkH1jUArI

Depthwise separable convolutions for machine learning - Eli Bendersky's website eli.thegreenplace.net/2018/depthwise-separable-convolutions-for-machine-learning/

Squeeze-and-Excitation Networks: Setting a new state of the art on ImageNet towardsdatascience.com/squeeze-and-excitation-networks-9ef5e71eacd7

[Paper Summary] MobileNet v2: Inverted residuals and linear bottlenecks (n1094.tistory.com)

YouTube:

PR-169: EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks #TensorFlow-KR paper reading group
